Want to skip to the code? ClusterScrape is an example Elixir application that demonstrates one way to use BEAM’s built-in clustering on AWS.

Background

Something we pride ourselves on at Ad Hoc is our preference to own the operations and maintenance for the things we build. We never want to be application developers who declare a thing finished and throw it over the wall for someone else to run. The tangible impact of this viewpoint is that we don’t build things for clients using technology we don’t know how to run in production. The risk of this approach is that our technology choices could stagnate. Research and Development (R&D) is one of the ways we mitigate that risk, by evaluating tools outside of the client context.

Elixir is a technology I recently worked with while doing R&D. From the language’s site: “Elixir is a dynamic, functional language designed for building scalable and maintainable applications.” Elixir is a fairly recent language with Ruby-inspired syntax. It runs on BEAM, the virtual machine that has powered Erlang applications since the early 1990s. This gives Elixir a foundation for building highly concurrent applications, through lightweight processes that communicate by message passing and through immutable data structures. It also gives Elixir OTP, the Open Telecom Platform, which provides idioms such as supervisors and generic servers for building fault-tolerant systems.

One of the killer features that both Erlang and Elixir offer is simple distribution of work across a cluster via Erlang’s rpc module (called as :rpc from Elixir). With that module it’s easy to run functions on other nodes using functions like parallel_eval/1 or pmap/3 (the <function>/<number> notation denotes the arity of a function, AKA the number of arguments it takes). The node a given application is running on, however, needs a way to know which other nodes exist in the cluster. Commonly, these sets of nodes are determined either by providing lists of IPs/hostnames when the app boots or through multicast discovery. We deploy applications on AWS EC2 instances, using auto scaling across multiple availability zones, so neither approach would work: instance IPs change as the group scales, and IP multicast is difficult to route. I set out to determine what it would take to make clustering work with the way we build and deploy applications.
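For readers new to the rpc module, here is a minimal sketch of the two functions mentioned above, called from Elixir as `:rpc`. The example inputs are arbitrary. The sketch also runs on a single, non-distributed node, because with no other nodes connected every call simply runs locally. In a connected cluster, the calls are spread round-robin over the local node and `Node.list()`.

```elixir
# pmap/3 applies String.upcase/1 to each list element, distributing the
# calls over [node() | Node.list()] and returning results in input order.
upcased = :rpc.pmap({String, :upcase}, [], ["alpha", "beta", "gamma"])
IO.inspect(upcased)

# parallel_eval/1 evaluates a list of {module, function, args} tuples the
# same way, returning one result per tuple in the original order.
results = :rpc.parallel_eval([{Enum, :sum, [[1, 2, 3]]}, {Enum, :max, [[4, 9, 2]]}])
IO.inspect(results)
```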

Requirements

Build process

I wanted the example application to match how we build applications for client work as closely as possible. We commonly package an app as an .rpm file, then bake that RPM onto an AMI to be deployed in AWS. I used Distillery to help with this. It builds a release of the app, including the Erlang runtime and all of its Elixir dependencies, into a directory that can simply be dropped onto a server.
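A Distillery release is configured in `rel/config.exs`. The fragment below is a sketch in Distillery 2.x syntax (1.x used `use Mix.Releases.Config`). The app name `:cluster_scrape` and the cookie value are placeholders, not taken from ClusterScrape. For clustering, the setting that matters most is the cookie. Erlang nodes only connect to nodes that share their cookie, so every instance baked from the same release needs to carry the same one.

```elixir
# rel/config.exs (sketch; names and cookie are placeholders)
use Distillery.Releases.Config,
  default_release: :default,
  default_environment: Mix.env()

environment :prod do
  # Bundle the Erlang runtime so the target server needs no Erlang install
  set include_erts: true
  set include_src: false
  # Every node in the cluster must share this cookie to connect
  set cookie: :"replace-with-a-shared-secret"
end

release :cluster_scrape do
  set version: current_version(:cluster_scrape)
  set applications: [:runtime_tools]
end
```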

Tooling

It can’t use any of the tooling we’ve built for clients. That tooling code is owned by our clients, and it would be inappropriate to use it outside of that context. Building from the ground up, or at least from a lower tooling baseline, is also a great empathy builder. It helps cement appreciation for the great tools our ops engineers have built in a client context.

I chose a workflow where the app was built locally in an Amazon Linux Docker container, then baked onto an AMI using Packer. Building in containers allowed quicker iteration in my local development environment.

Node pools

Each node needs a way to discover its peers. I did this with EC2 instance tags, tagging each instance with the release it runs so that functions are never run across different versions of the app. Each node then polls the EC2 DescribeInstances API for running instances with the same release tag:

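```elixir
# Assumes ExAws with EC2 support (the ex_aws_ec2 package in ExAws 2.x) and
# :xmerl in extra_applications so the XML parser ships with the release.
# Assumes every node was started with --name node@<its private DNS name>.
# The module name is illustrative.
defmodule ClusterScrape.NodePool do
  def poll do
    # Get the running instances (state code 16) tagged with this release
    {:ok, %{body: body}} =
      ExAws.EC2.describe_instances(
        filters: ["tag:csrelease": System.get_env("RELEASE_HASH"), "instance-state-code": 16]
      )
      |> ExAws.request()

    # Parse the XML response body with Erlang's xmerl
    {doc, _rest} =
      body
      |> :binary.bin_to_list()
      |> :xmerl_scan.string()

    # Extract the private DNS hostname of each instance
    chunks =
      :xmerl_xpath.string(
        ~c"/DescribeInstancesResponse/reservationSet/item/instancesSet/item/privateDnsName/text()",
        doc
      )

    # Turn each hostname into a node name like :"node@ip-10-0-1-5.ec2.internal"
    Enum.map(chunks, fn {:xmlText, _, _, _, hostname, :text} ->
      String.to_atom("node@" <> to_string(hostname))
    end)
  end
end
```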
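`poll/0` only returns node names. Something still has to connect to those nodes, and keep doing so as the auto scaling group grows and shrinks. Below is a minimal sketch of one way to do that. It assumes a hypothetical `NodePoller` GenServer (not from ClusterScrape) that takes the discovery function as a `:discover` option and re-polls on an interval. The 30-second default interval is arbitrary.

```elixir
defmodule NodePoller do
  # Hypothetical module: periodically calls a discovery function and
  # connects to every node it returns.
  use GenServer

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  # Returns the node list found by the most recent poll.
  def last_seen(pid), do: GenServer.call(pid, :last_seen)

  @impl true
  def init(opts) do
    state = %{
      # Zero-arity function returning a list of node names (atoms)
      discover: Keyword.fetch!(opts, :discover),
      # Milliseconds between polls (arbitrary default)
      interval: Keyword.get(opts, :interval, 30_000),
      last_seen: []
    }

    # Poll right away instead of waiting a full interval
    send(self(), :poll)
    {:ok, state}
  end

  @impl true
  def handle_info(:poll, state) do
    nodes = state.discover.()

    # Skip ourselves. Node.connect/1 returns true, false, or :ignored
    # (:ignored when the local node is not running distributed).
    nodes
    |> Enum.reject(&(&1 == node()))
    |> Enum.each(&Node.connect/1)

    Process.send_after(self(), :poll, state.interval)
    {:noreply, %{state | last_seen: nodes}}
  end

  @impl true
  def handle_call(:last_seen, _from, state) do
    {:reply, state.last_seen, state}
  end
end

# Demo: discover only ourselves, so nothing is actually connected
{:ok, pid} = NodePoller.start_link(discover: fn -> [node()] end)
IO.inspect(NodePoller.last_seen(pid))
```

In an application, this would typically sit in the supervision tree as `{NodePoller, discover: &YourModule.poll/0}`, where `YourModule` is a placeholder for wherever `poll/0` lives. If the discovery call raises (for example, on an AWS API error), the poller crashes and its supervisor restarts it.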

Results

The end result is ClusterScrape, an application that, given a properly configured AWS VPC, can create an auto scaling group, spin up instances in it, then delegate work across them. The work itself is simple: the app calculates SHA256 checksums for web pages. But it spreads that work across all the instances in the auto scaling group.
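The checksum work can be sketched the same way. This sketch assumes the page bodies have already been fetched (the HTTP part is left out). It uses a hypothetical `ChecksumSketch` module rather than ClusterScrape's own code. `:rpc.pmap/3` hands each body to `checksum/1` on either the local node or a connected peer. Because the call names a module and function, that module must be loaded on every node, which holds when all nodes run the same release.

```elixir
defmodule ChecksumSketch do
  # Hypothetical module; page bodies are assumed to be fetched already.

  # Lowercase hex SHA-256 digest of a binary.
  def checksum(body) when is_binary(body) do
    :crypto.hash(:sha256, body) |> Base.encode16(case: :lower)
  end

  # Spreads checksum/1 over [node() | Node.list()], keeping input order.
  def checksum_all(bodies) when is_list(bodies) do
    :rpc.pmap({__MODULE__, :checksum}, [], bodies)
  end
end

IO.inspect(ChecksumSketch.checksum_all(["hello", "world"]))
```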

This application is in no way polished or complete, but it did the job it set out to do. It proved to me that if we wanted to build an application in Elixir and take advantage of the tooling it offered, we could do so while still maintaining our standard for application building and deployment.

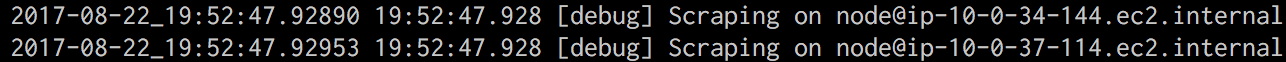

Example output and example logs.