The carrots and sticks of platform governance

In our first post on platform governance, we established the importance of finding a sweet spot that avoids either giving teams too much autonomy or weighing them down with strict compliance hurdles. We often call this the “carrot and stick approach” to governance: it should make building the right thing the easiest thing. Finding the right balance is easier said than done, of course. That’s why we recommend an iterative approach to implementing governance, so you can find the mix of written, automated, and manual enforcement that meets business and user needs without introducing unnecessary restrictions.

Let’s look at accessibility, for example. Section 508 requires federal agencies to make their digital services meet defined accessibility standards. That governance is written, but because there are too many websites for a small group of auditors to review, it isn’t explicitly enforced. Each organization is expected to understand those standards and self-enforce them in the things it builds. An organization may decide to rely on its teams to self-enforce accessibility practices, or it may choose to add oversight to ensure the products its teams deliver are accessible. When an organization chooses to enforce accessibility standards in a more centralized way, it often addresses this need from several angles, providing both incentives and enforcement measures.

Below, we’ll review some of those carrots and sticks you might lean on to ensure products using your platform meet your organization’s quality expectations.

Enforcement: the stick

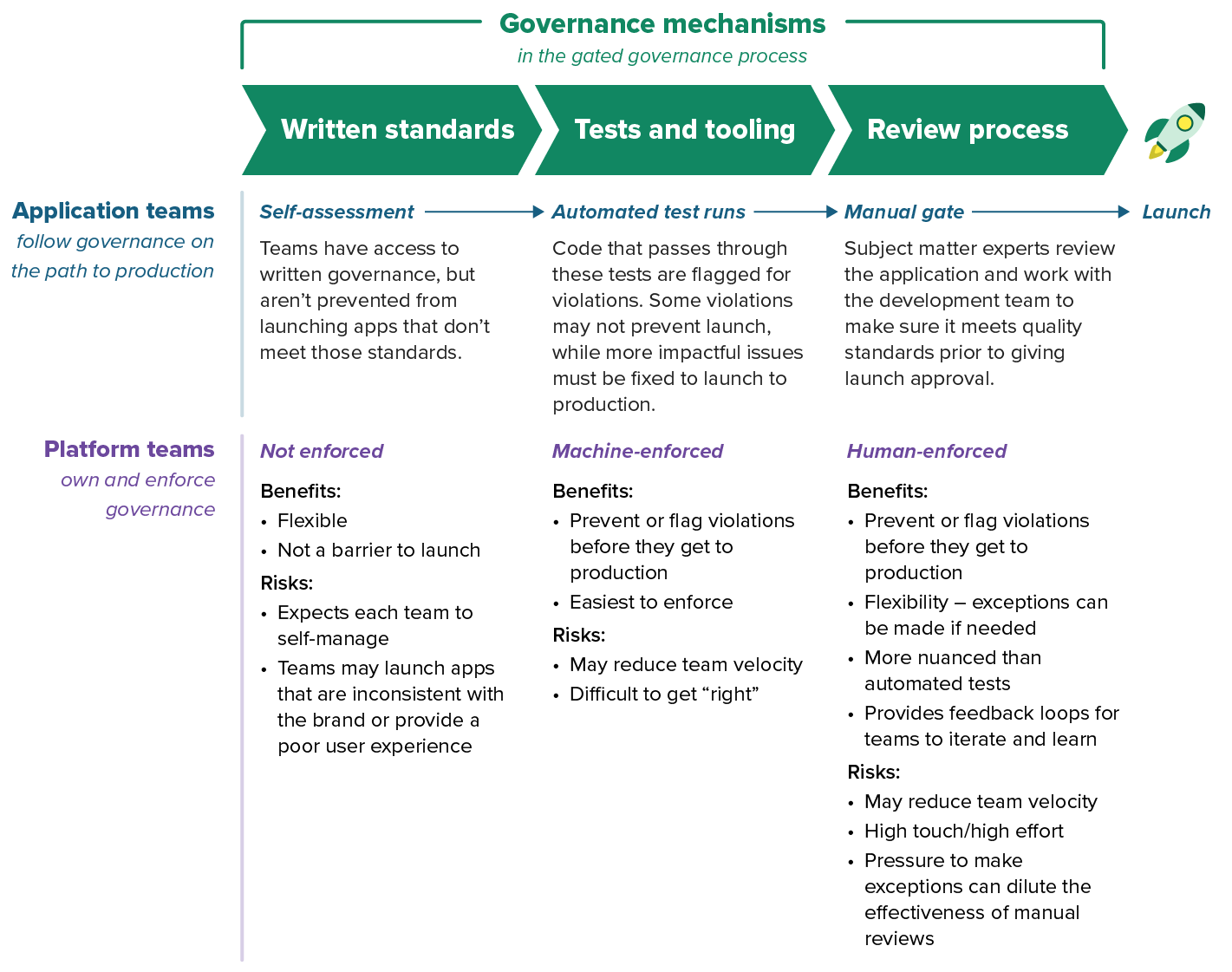

Policies may be written but not enforced, enforced by manual review/gates, or enforced with automation – or a combination of the three, depending on the need and resource availability.

Written guidance

The organization will have its own written standards that set expectations for quality and explain how teams can meet them. The clearer that guidance is, and the more detail you can provide about how to comply with it, the greater the chance that teams will meet expectations on their own.

Example

The VA’s accessibility governance refers back to the federal Section 508 requirements, and the VA.gov platform supplements that standard with additional details on how that applies to teams building on their platform.

Written guidance on its own isn’t enforcement, however. It relies on teams being aware of the policies and standards and complying with them voluntarily. When governance is written but not enforced, it’s better thought of as a set of best practices.

Manual review and approval

Manual reviews can be used as a first step before implementing automated tests or as a supplement to automated checkpoints. For cases that require a human touch, a platform team can establish a manual review process that prevents application teams from launching products that don’t meet standards. You will find that a lot of governance, including governance for accessibility, design consistency, and plain language, requires some level of human review.

Example

Google’s public-facing APIs all go through an API design review process before launch, applying standards around naming conventions, error messages, common design patterns, and more.

Automated testing

With automated testing and gating, an application must pass automated tests before it can be published to a production environment. Automated tests serve as a powerful gate because the tooling prevents teams from pushing code to production if it doesn’t meet predefined standards.

Example

The VA.gov platform’s automated build process tests for accessibility compliance on all static pages. If code fails the test, the team must fix the issues and try again.
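To make the idea of an automated gate more concrete, here is a minimal Python sketch of a build step that scans static HTML pages for one common accessibility failure, images with no alt attribute, and returns a nonzero exit code when it finds any. This is not the VA.gov platform’s actual tooling: the function names, the single rule, and the exit-code convention are assumptions for illustration. Real pipelines usually rely on an established accessibility engine such as axe-core, which checks far more rules.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Hypothetical single-rule check: flags <img> tags with no alt attribute."""

    def __init__(self):
        super().__init__()
        self.violations = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs. An empty alt="" is allowed
        # because it marks a decorative image; a missing alt is a violation.
        if tag == "img" and "alt" not in dict(attrs):
            line, _offset = self.getpos()
            self.violations.append(f"line {line}: <img> missing alt attribute")

    # HTMLParser's default handle_startendtag calls handle_starttag,
    # so self-closing tags like <img src="x" /> are checked too.


def check_page(html: str) -> list[str]:
    """Return the violation messages found in one static page."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations


def gate(pages: dict[str, str]) -> int:
    """Return 1 if any page fails the check, else 0 (assumed CI convention)."""
    failed = False
    for path, html in pages.items():
        for message in check_page(html):
            print(f"{path}: {message}")
            failed = True
    return 1 if failed else 0


if __name__ == "__main__":
    # Hypothetical pages; a real build step would read them from the build
    # output directory and call sys.exit(gate(pages)) so a failure blocks deploy.
    sample_pages = {
        "index.html": '<h1>Home</h1><img src="logo.png" alt="Agency logo">',
        "about.html": '<img src="team.jpg"><img src="divider.png" alt="">',
    }
    print("exit code:", gate(sample_pages))
```

The point of returning an exit code rather than just printing warnings is that most CI systems treat a nonzero exit as a failed step, which is what turns a check into a gate: the team has to fix the page before the deploy can proceed.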

The success of these methods will depend on how you implement them. If application teams experience your processes as difficult, frustrating hurdles, that discontent will lead to disillusionment with the process as a whole. Those responsible for enforcing governance will need to build strong relationships with the application teams. Leading with relationships, and with empathy for the extra work these requirements put on teams, can help ease their frustration and keep the working dynamic collaborative rather than combative. To do this well, the platform team should regularly research how developers use the platform, learn where teams face the biggest bottlenecks, and work to remove them.

Incentives: the carrot

A governance process with only enforcement levers can lead to a poor developer experience, however. Teams typically experience enforcement levers as roadblocks that only create more work on their way toward a shippable product. A platform can counterbalance this by offering incentives to application teams, making the platform enticing to developers.

A platform that provides good incentives will be a joy to work with – which means happier developers, a quicker rate of adoption, and higher quality in the things those teams build. You want your developers to have a better experience using the new tools and services your platform provides than with their familiar, default toolset. If teams find that your platform’s scaffolding helps accelerate them toward production-ready applications and minimizes the pain of compliance requirements, they’ll be excited about your platform and more likely to accept the enforcement requirements.

When your platform guides development teams to meet governance requirements as the default, you have made building the right thing the easiest thing.

Let’s dive into some examples of incentives you can implement.

Automated compliance controls

When the platform handles security controls and compliance paperwork for the common infrastructure it provides, it takes a major, tedious launch requirement off application teams’ plates.

Example

Kessel Run, the US Air Force’s platform for standardizing DevSecOps processes and infrastructure, has had widespread success in large part because it integrates a continuous Authority to Operate (ATO), which dramatically simplifies complex compliance requirements for applications.

Code samples

Documentation that includes working code samples to demonstrate how to use a component or service makes it easier for developers to use it successfully. Google’s research on API usability highlights the importance of working code samples for a new developer using an API, for example.

Example

The U.S. Web Design System provides accessible, brand-compliant code snippets and components for developers.

Starter kits

Similar to code samples, starter kits may give developers an entire working “hello world” application. This can be a compelling way to demonstrate how to use several parts of your platform at once, baking “the right thing” into sample code to help teams ramp up quickly. If your starter kit’s code already complies with governance requirements, application teams will have a head start toward successfully meeting those requirements in their final product.

Example

Google’s App Engine product provides entire working “hello world” applications that demonstrate a range of the platform’s services. Being able to deploy these with low effort gives developers an easy, fully working starting point they can build upon.

Training

Part of making the right thing the easiest thing comes down to awareness. Developers have to keep a lot of business and user needs top of mind as they build, in addition to coding best practices. With so many streams of knowledge required to build the right thing, many developers just aren’t aware of, or practiced in, all the governance requirements their app needs to meet. A platform team can make the unknowns known by offering training on crucial governance topics.

Example

Digital Services Georgia (DSGa) provides content managers using their GovHub content management platform with a variety of training options, including analytics, accessibility, and plain language training.

Customer support

Even after training, it’s not realistic to expect a team to get everything right immediately. Technical systems are nuanced and complex, and everyone needs help sometimes. Friendly, prompt attention from a platform team dedicated to helping app teams succeed is an essential ingredient of platform success.

Layers of governance – from written to coded, and everything in between

As our examples above show, there are many ways to meet quality standards and incentivize platform adoption. Your approach may change over time as you establish different levels of rigor to ensure quality, and these approaches can be layered so they complement each other and improve the process.

No platform will start out with mature governance processes. Governance should evolve and be iterated on over time, gaining features as the platform matures. The key to success is to include a governance strategy as part of your human-centered design and product management strategy.
